The hardware and bandwidth for this mirror is donated by METANET, the Webhosting and Full Service-Cloud Provider.

If you wish to report a bug, or if you are interested in having us mirror your free-software or open-source project, please feel free to contact us at mirror[@]metanet.ch.

The R package Artificial Intelligence for Education (aifeducation) is designed for the special requirements of educators, educational researchers, and social researchers. The target audience of this package are educators and researchers with no coding skills who would like to develop their own models, as well as people who would like to use those models created by other researchers/educators. The package supports the application of Artificial Intelligence (AI) for Natural Language Processing tasks such as text embedding and classification under the special conditions of the educational and social sciences.

Currently, the package focuses on classification tasks which can either be used to diagnose characteristics of learners from written material or to estimate the properties of learning and teaching material. In the future, more tasks will be implemented.

You can install the latest stable version of the package from CRAN with:

install.packages("aifeducation")You can install the development version of aifeducation from GitHub with:

install.packages("devtools")

devtools::install_github(repo="FBerding/aifeducation",

ref="master",

dependencies = "Imports")Further instructions for installation can be found in vignette 01 Get Started.

Please note that an update of your version of aifeducation may require an update of your python libraries. Refer to 01 Get Started for more details.

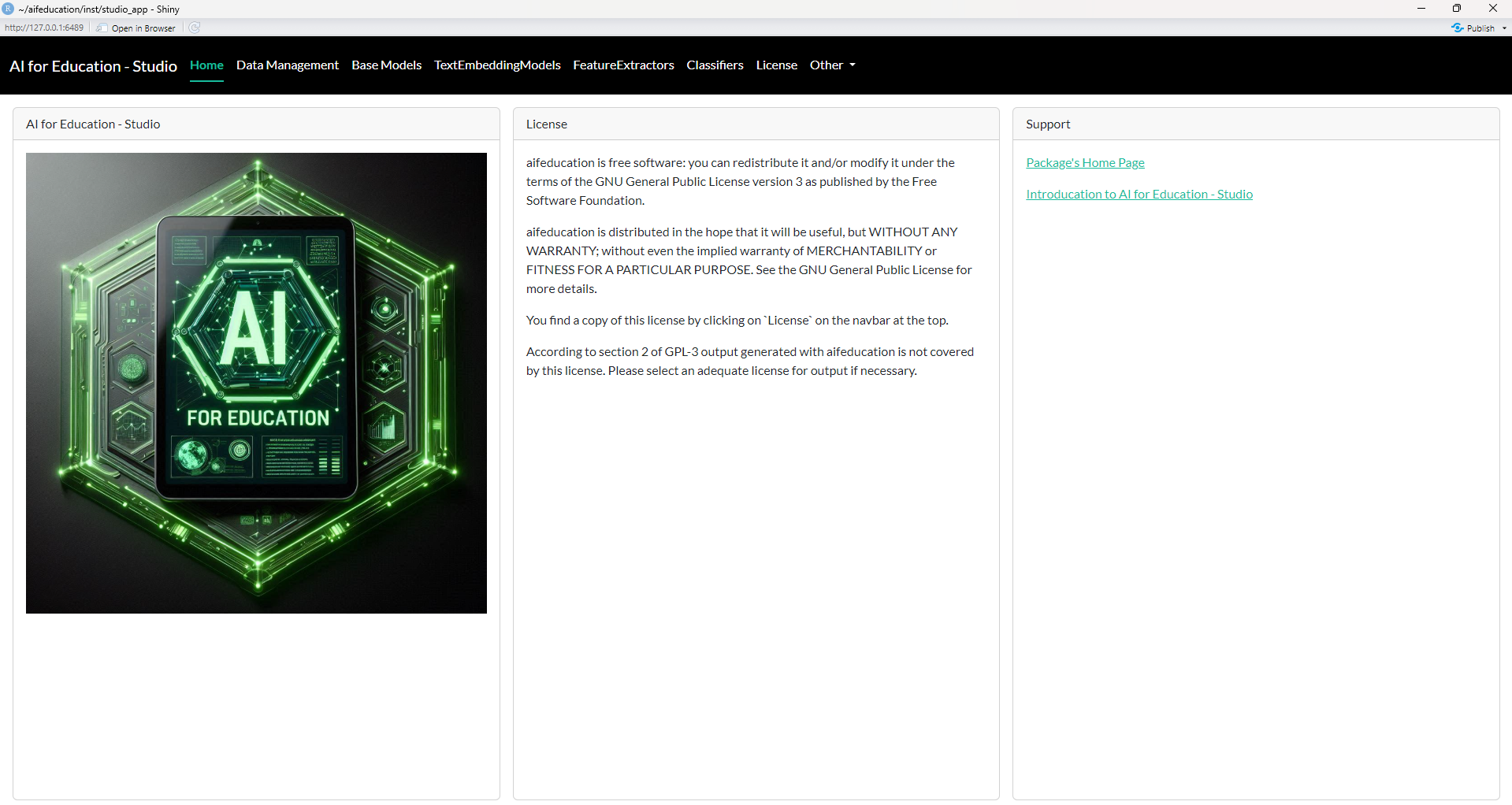

The package ships with a shiny app that serves as a graphical user interface.

AI for Education - Studio allows users to easily develop, train, apply, document, and analyse AI models without any coding skills. See the corresponding vignette for more details: 02 Using the graphical user interface Aifeducation - Studio.

Training AI models consumes time and energy. To help researchers estimate the ecological impact of their work, a sustainability tracker is implemented. It is based on the python library ‘codecarbon’ by Courty et al. (2023). This tracker allows to estimate the energy consumption for CPUs, GPUs and RAM during training and derives a value for CO2 emission. This value is based on the energy mix in the country where the computer is located.

The core machine learning framework of this package is ‘PyTorch’, providing a broad support of graphical devices to accelerate computations, access to new and unique model architectures, and a high compatibility of models across different versions of this machine learning framework.

Research requires reproducibility and traceability. Thus, starting with version 1.0.0 of this package, it has top priority to ensure that already trained models work with future versions of this package.

Classification tasks require the transformation of raw texts into a representation with numbers. For this step, aifeducation supports new approaches such as modernBERT (Warner et al. 2024), MPNet (Song et al. 2020), BERT (Devlin et al. 2019), RoBERTa (Liu et al. 2019), and Funnel-Transformer (Dai et al. 2020).

aifeducation supports the use of pre-trained transformer models provided by Hugging Face and the creation of new transformers, allowing educators and researchers to develop specialized and domain-specific models. See 04 Model configuration and training for details about the configuration of a new model.

The package supports the analysis of long texts. Depending on the method, long texts are transformed into vectors at once, or, if too long, are split into several chunks which results in a sequence of vectors.

For the second step within a classification task, aifeducation integrates some important statistical and mathematical methods for dealing with the main challenges in educational and social sciences for applying AI. These are:

In order to deal with the problem of imbalanced data sets, the package integrates the Synthetic Minority Oversampling Technique into the learning process. Currently, the K-Nearest Neighbor OveRsampling Approach (KNNOR) developed by Islam et al. (2022) is available in fast C++. This approach reached high performance across different tasks and data sets compared to other techniques (Islam et al. 2022).

In order to address the problem of small data sets, training loops of AI integrate pseudo-labeling (e.g., Lee 2013). Pseudo-labeling is a technique which can be used for supervised learning. More specifically, educators and researchers rate a part of a data set and train AI with this very part. The remainder of the data is not processed by humans. Instead, AI uses this part of data to learn on its own. Thus, educators and researchers only have to provide additional data for the AI’s learning process without coding it themselves. This offers the possibility to add more data to the training process and reduce labor cost.

Classification tasks in machine learning are comparable to the empirical method of content analysis from the social sciences. This method looks back on a long research tradition and an ongoing discussion on how to evaluate the reliability and validity of generated data. In order to provide a link to this research tradition and to provide educators as well as educational and social researchers with performance measures they are more familiar with, every AI trained with this package is evaluated with the following measures and concepts:

In addition, some traditional measures from machine learning literature are also available:

Since the package is based on ‘PyTorch’ and the transformer library, every trained AI can be shared with other educators and researchers. The package supports an easy use of pre-trained AI within R, but also provides the possibility to export trained AI to other environments.

Using a pre-trained AI for classification only requires the classifier and the corresponding text embedding model. Use AI for Education Studio or just load both to R and start predictions. Vignette 02 Using the graphical user interface Aifeducation - Studio describes how to use the user interface. Vignette 03 Using R syntax describes how to save and load the objects with R syntax. In vignette 05 Sharing and Using Trained AI/Models you can find a detailed guide on how to document and share your models.

Berding, F., & Pargmann, J. (2022). Iota Reliability Concept of the Second Generation. Berlin: Logos. https://doi.org/10.30819/5581

Berding, F., Riebenbauer, E., Stütz, S., Jahncke, H., Slopinski, A., & Rebmann, K. (2022). Performance and Configuration of Artificial Intelligence in Educational Settings.: Introducing a New Reliability Concept Based on Content Analysis. Frontiers in Education, 1-21. https://doi.org/10.3389/feduc.2022.818365

Bloemen, A. (2011). Lernaufgaben in Schulbüchern der Wirtschaftslehre: Analyse, Konstruktion und Evaluation von Lernaufgaben für die Lernfelder industrieller Geschäftsprozesse. Hampp.

Cohen, J (1968). Weighted kappa: Nominal scale agreement with provision for scaled disagreement or partial credit. Psychological Bulletin, 70(4), 213–220. https://doi.org/10.1037/h0026256

Cohen, J (1960). A Coefficient of Agreement for Nominal Scales. Educational and Psychological Measurement, 20(1), 37–46. https://doi.org/10.1177/001316446002000104

Courty, B., Schmidt, V., Goyal-Kamal, Coutarel, M., Feld, B., Lecourt, J., & … (2023). mlco2/codecarbon: v2.2.7. https://doi.org/10.5281/zenodo.8181237

Dai, Z., Lai, G., Yang, Y. & Le, Q. V. (2020). Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing. https://doi.org/10.48550/arXiv.2006.03236

Devlin, J., Chang, M.‑W., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In J. Burstein, C. Doran, & T. Solorio (Eds.), Proceedings of the 2019 Conference of the North (pp. 4171–4186). Association for Computational Linguistics. https://doi.org/10.18653/v1/N19-1423

Fleiss, J. L. (1971). Measuring nominal scale agreement among many raters. Psychological Bulletin, 76(5), 378–382. https://doi.org/10.1037/h0031619

Gruber, N., & Kreuzpointner, L. (2013). Measuring the reliability of picture story exercises like the TAT. PloS One, 8(11), e79450. https://doi.org/10.1371/journal.pone.0079450

Gwet, K. L. (2014). Handbook of inter-rater reliability: The definitive guide to measuring the extent of agreement among raters (Fourth edition). STATAXIS.

Haixiang, G., Yijing, L., Shang, J., Mingyun, G., Yuanyue, H., & Bing, G. (2017). Learning from class-imbalanced data: Review of methods and applications. Expert Systems with Applications, 73, 220–239. https://doi.org/10.1016/j.eswa.2016.12.035

He, P., Liu, X., Gao, J. & Chen, W. (2020). DeBERTa: Decoding-enhanced BERT with Disentangled Attention. https://doi.org/10.48550/arXiv.2006.03654

Islam, A., Belhaouari, S. B., Rehman, A. U. & Bensmail, H. (2022). KNNOR: An oversampling technique for imbalanced datasets. Applied Soft Computing, 115, 108288. https://doi.org/10.1016/j.asoc.2021.108288

Krippendorff, K. (2019). Content Analysis: An Introduction to Its Methodology (4th Ed.). SAGE.

Lee, D.‑H. (2013). Pseudo-Label: The Simple and Efficient Semi-Supervised Learning Method for Deep Neural Networks. CML 2013 Workshop: Challenges in Representation Learning.

Liu, Y., Ott, M., Goyal, N., Du, J., Joshi, M., Chen, D., Levy, O., Lewis, M., Zettlemoyer, L., & Stoyanov, V. (2019). RoBERTa: A Robustly Optimized BERT Pretraining Approach. https://doi.org/10.48550/arXiv.1907.11692

Luan, H., Geczy, P., Lai, H., Gobert, J., Yang, S. J. H., Ogata, H., Baltes, J., Guerra, R., Li, P., & Tsai, C.‑C. (2020). Challenges and Future Directions of Big Data and Artificial Intelligence in Education. Frontiers in Psychology, 11, 1–11. https://doi.org/10.3389/fpsyg.2020.580820

Song, K., Tan, X., Qin, T., Lu, J. & Liu, T.‑Y. (2020). MPNet: Masked and Permuted Pre-training for Language Understanding. https://doi.org/10.48550/arXiv.2004.09297

Stütz, S., Berding, F., Reincke, S., & Scheper, L. (2022). Characteristics of learning tasks in accounting textbooks: an AI assisted analysis. Empirical Research in Vocational Education and Training, 14(1). https://doi.org/10.1186/s40461-022-00138-2

Warner, B., Chaffin, A., Clavié, B., Weller, O., Hallström, O., Taghadouini, S., Gallagher, A., Biswas, R., Ladhak, F., Aarsen, T., Cooper, N., Adams, G., Howard, J. & Poli, I. (2024). Smarter, Better, Faster, Longer: A Modern Bidirectional Encoder for Fast, Memory Efficient, and Long Context Finetuning and Inference. https://doi.org/10.48550/arXiv.2412.13663

Wong, J., Baars, M., Koning, B. B. de, van der Zee, T., Davis, D., Khalil, M., Houben, G.‑J., & Paas, F. (2019). Educational Theories and Learning Analytics: From Data to Knowledge. In D. Ifenthaler, D.-K. Mah, & J. Y.-K. Yau (Eds.), Utilizing Learning Analytics to Support Study Success (pp. 3–25). Springer. https://doi.org/10.1007/978-3-319-64792-0_1

These binaries (installable software) and packages are in development.

They may not be fully stable and should be used with caution. We make no claims about them.